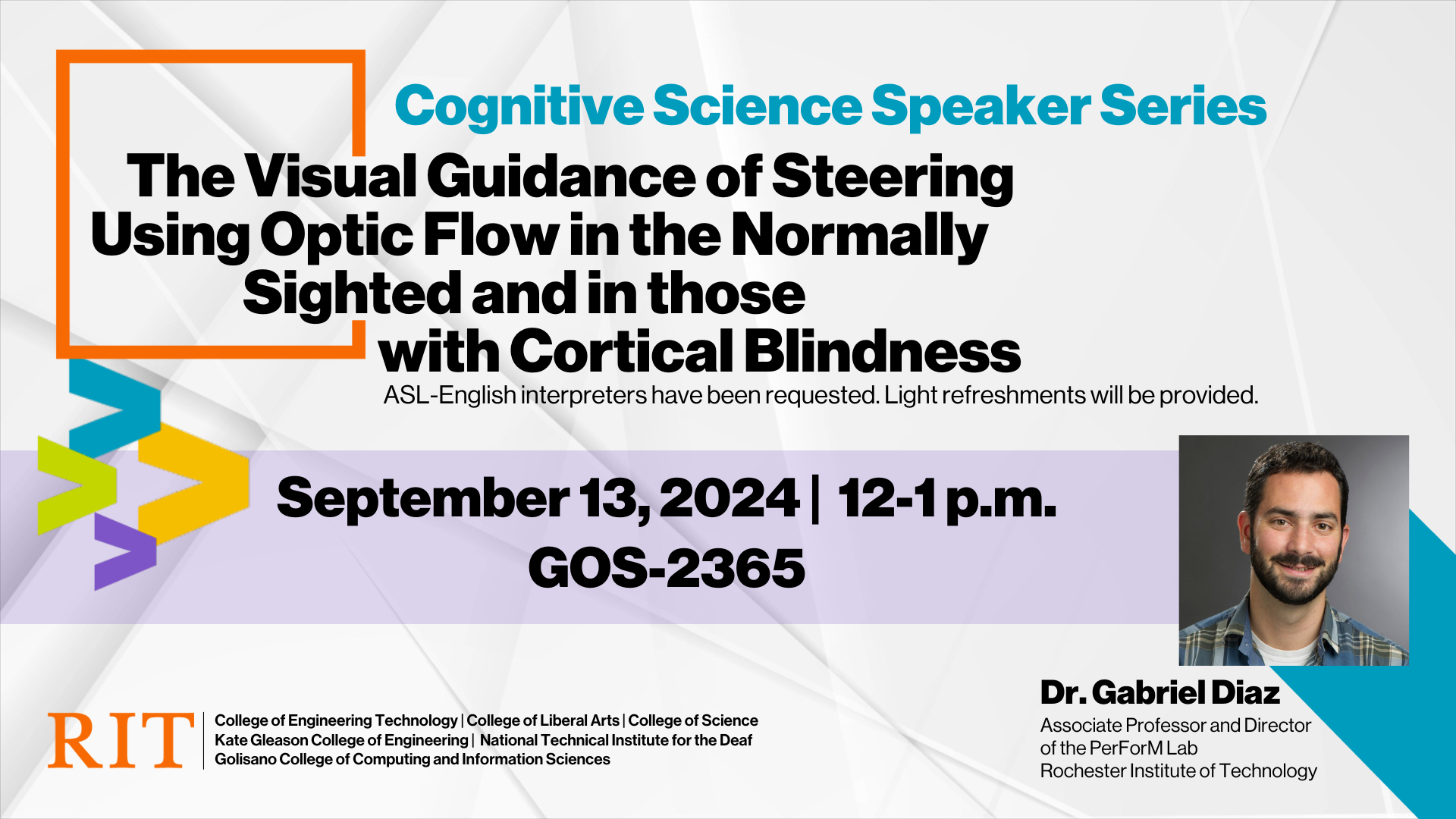

Cog Sci Speaker Series: Visual Guidance of Steering Using Optic Flow

Presenter: Dr. Gabriel Diaz

Title: The Visual Guidance of Steering Using Optic Flow in the Normally Sighted and in those with Cortical Blindness

Abstract: The visual guidance of steering is thought to rely significantly on the patterns of motion that arise during translation through a stable world environment, commonly referred to as optic flow (OF). The OF patterns that arise during movement are correlated in time and across the visual field, and heading judgements can be accurate using only a portion of the flow field (Warren and Kurtz 1992). Nevertheless, patients who have stroke-induced damage to the visual cortex, and who consequently experience blind fields over one quarter to one half of the visual field, demonstrate greater biases in lane keeping than visually intact controls (Bowers et al. 2010). One possible explanation for these biases is that the “blind” region acts as a source of noise that biases perception of heading during steering, a reasonable explanation when one considers that the affected region plays a crucial role in the early stages of motion processing. To test this hypothesis, we have conducted a series of studies in which a group of visually intact controls and patients with cortical blindness (CB), immersed in virtual reality, are asked to maintain a center-lane position while traveling at 19 m/s on a procedurally generated one-lane road. Turn direction (left/right) and turn radius (35, 55, or 75 m) were systematically manipulated between turns. Additionally, optic flow density was indirectly manipulated through variation in environmental texture density (low, medium, high). In this talk, I will present insights learned from this ongoing work into the visual guidance of steering, the effects of cortical blindness, the contributions from gaze behavior, and our (very) early efforts to model the relationship between visual information and steering behavior using biologically informed neural networks.

Bio: Gabriel Diaz received his doctoral degree in Cognitive Science from Rensselaer Polytechnic Institute, where he worked with Brett R. Fajen. From 2010 to 2013, he worked as a post-doc with Mary Hayhoe at UT Austin, and in August of 2013, Gabriel joined the faculty at the Rochester Institute of Technology’s Center for Imaging Science, where he is now an Associate Professor and Director of the PerForM Lab (Perception for Movement). PerForM draws on Dr. Diaz’s prior experience in the use of virtual reality, motion capture, eye tracking, and machine learning to study the role of eye and head movements in guiding behavior in natural and simulated environments. Basic research is conducted in parallel with industry-funded and applied work on the development of new technologies to improve the accuracy and precision of mobile and VR/AR-integrated eye tracking technology.

Event Snapshot

When and Where

Who

Open to the Public

Cost

FREE

Interpreter Requested?

Yes