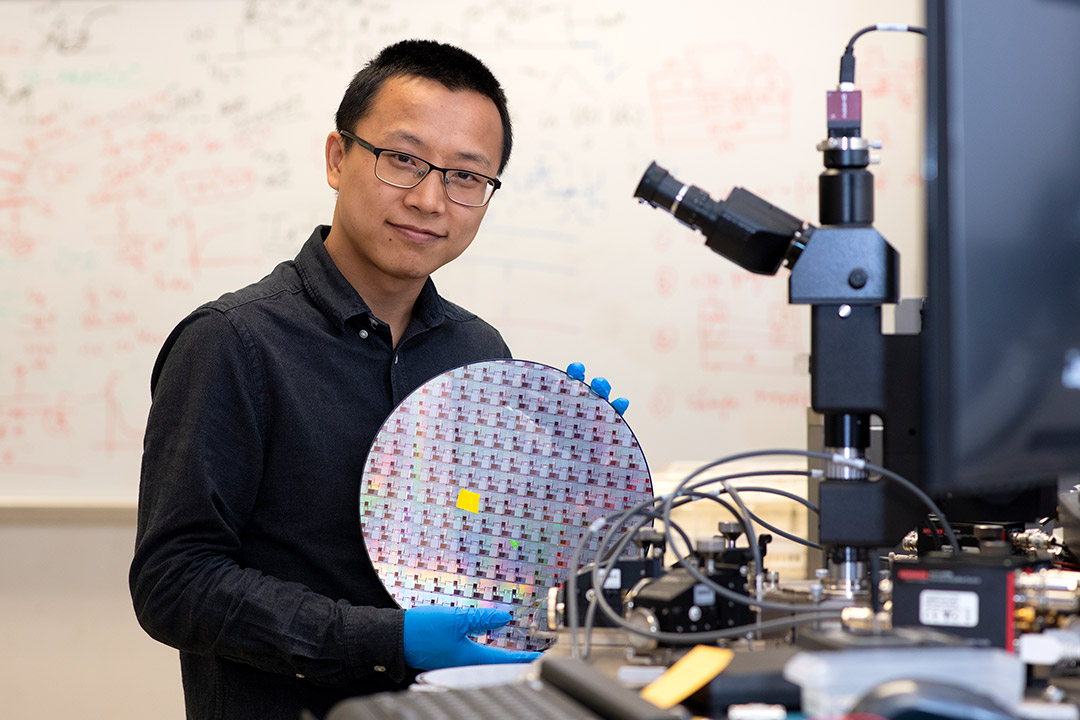

Microelectronic engineering professor developing options for improving memory technologies for storage and computing

Work by Kai Ni honored with DARPA Young Faculty Award toward advancing memory technologies

A. Sue Weisler

Engineering faculty-researcher Kai Ni was awarded funding to improve computing memory technologies through DARPA’s Young Faculty Award Program.

Research at Rochester Institute of Technology into new energy-efficient materials for computing could ease the bottleneck that often occurs when retrieving large amounts of data, which hinders processing throughput and energy efficiency.

Kai Ni, assistant professor of electrical engineering in RIT’s Kate Gleason College of Engineering, received funding to advance alternative materials and processes toward improving how electronic devices store, retrieve, and process big data. The funding is part of the Defense Advanced Research Projects Agency’s (DARPA) Young Faculty Award Program, given to early-career faculty-researchers exploring technologies in areas of national security.

Ni is an expert in the field of semiconductors and novel computing memory technologies. His work is in the area of ferroelectrics, an emerging field where energy efficiency and increased computing power are being developed through the integration of new materials, the physical modeling of devices, and more effective application of the developed devices in accelerator system architectures, the overall computer processing systems.

To compute or extract data, users must move data from memory to the computing device’s applications. Once the functions are complete, the data is stored again. That movement, especially of large data sets, becomes a data transfer bottleneck, Ni explained.

“The challenge is memory. Basically, it takes more energy and time to fetch the data from the memory to CPU, so moving the data from storage consumes the most energy; that is a bottleneck we are trying to solve,” said Ni, director of the NanoElectronic Devices and Systems Group in RIT’s Department of Electrical and Microelectronic Engineering. “That is really the goal we are trying to achieve, and it is not easy.”

Ni was awarded nearly $1 million for this project. Trends in this research area show promise for more energy-efficient processing, and the work is aimed at confirming the use of ferroelectric materials to accelerate applications, such as processing massive amounts of data, and to improve accelerators for artificial intelligence and neuromorphic computing technology. These technologies are being sought for improvements to data and signal processing, secure coding, and resource management in defense applications, but they are also being developed across industries from health care to consumer devices.

“Research to advance the use of novel materials in practical and scalable microelectronic devices is critically needed to achieve the performance required for next-generation computing,” said Doreen Edwards, dean of RIT’s Kate Gleason College of Engineering. “Not only will Dr. Ni’s research help develop new technologies, it will help educate the next generation of microelectronic and microsystems engineers at RIT.”

Ferroelectric substances, such as perovskite, a semiconductor material, have only recently become a key focus of research for optoelectronic devices and technologies. They can be used to make transducers or capacitors; however, some of the materials are not easily integrated without special processing, particularly when it comes to scaling them down, and especially given the increased power demands of today’s devices. Ni’s early findings indicate that new materials such as hafnium oxide, an electrical insulator that has been found to be ferroelectric, can improve processing and lead to the needed integration of a computer’s memory and logic functions.

Although there have been breakthroughs in the field using hafnium oxide, Ni said there are challenges in refining its integration into semiconductor devices. While the material has the electrical properties needed for improving application processing, scaling it within the small area of a transistor is key to developing and improving memory. That is a unique quality of ferroelectric technologies, which are being tested extensively in AI chips for the acceleration of neural networks.

“Conventional ways of packaging more transistors and packing more computing resources is no longer sustainable, so how can we keep making computers even more powerful? That is what we are working on,” said Ni. “It is the right time to go to electronics, because there are so many options to explore. We are looking to emulate the brain—how can we do this for more efficient devices? How can we go beyond the 2D chip to make 3D and stack multiple chips together? And how can you build a quantum computer—all of these options are exciting. I like to try new things, and I’d like our group to make contributions to this field.”